Facticity.AI Outperforms Eight Leading AI Search Engines by 3X in News Citation Accuracy, Announces Launch of X Bot @AskFacticity

New benchmark results show Facticity.AI achieves an incorrect citation rate of just 14%, comparing favorably with eight leading AI search competitors including ChatGPT Search (67%), Perplexity (42%), and Gemini (81%). Although Perplexity Pro delivered slightly more correct citations, it produced more than three times as many incorrect attributions as Facticity.AI. This breakthrough in trustworthy AI citations stems from Facticity.AI's epistemologically modest approach and its focus on transparent explainability.

Superior Performance in Comprehensive Evaluation

In an in-house analysis of 200 benchmark entries conducted by CTO A M Shahruj, Facticity.AI demonstrated exceptional accuracy in news citations, significantly outperforming eight leading AI search engines. The evaluation, which builds on the benchmark introduced by the Tow Center for Digital Journalism, revealed striking incorrect citation rates (combining completely and partially incorrect attributions) among the competitors: ChatGPT Search (67%), Perplexity (42%), Perplexity Pro (45%), DeepSeek Search (67.5%), Copilot (42%), Grok-2 Search (77%), Grok-3 Search (94%), and Gemini (81%). These findings come at a critical time, as concerns about AI-generated misinformation grow, highlighted by the recent Columbia Journalism Review study that documented widespread citation failures across AI search tools.

"Our benchmark results demonstrate that accurate attribution isn't just desirable; it should become the minimum standard for responsible AI systems," said Dennis Yap, the inventor of Facticity.AI. Yap draws on the Via Negativa theology of the Greek Orthodox Church, in which he was baptized, and on the writings of Nassim Nicholas Taleb.

Specialized Methodology Ensures Reliable Results

For this evaluation, Facticity.AI adapted its classification system, which normally categorizes claims as True, False, or Unverifiable, to align with the benchmark criteria. The modified system categorized results as "Found" (exact match with a verified source), "Paraphrased" (a variation of the quote was found), or "No Match Found" (no matching claim in the assessed sources). This new Source Finder mode is available through the toggle button at https://app.facticity.ai/. Apart from this adaptation, Facticity.AI kept its core technology stack and agentic workflow for gathering, ranking, and verifying sources, which is the foundation of its performance.
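To make the three Source Finder labels concrete, here is a minimal illustrative sketch of how a quote could be sorted into "Found", "Paraphrased", or "No Match Found". It is not Facticity.AI's implementation: the word-level matching via Python's standard `difflib.SequenceMatcher`, the helper names `normalize`, `best_window_ratio`, and `classify_quote`, and the hypothetical `PARAPHRASE_THRESHOLD` of 0.8 are all assumptions chosen for illustration. Note the conservative default: anything short of a close match falls through to "No Match Found".

```python
from difflib import SequenceMatcher

# Hypothetical cut-off for treating a near match as a paraphrase.
# Facticity.AI's real thresholds and matching method are not described in this release.
PARAPHRASE_THRESHOLD = 0.8


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences are ignored."""
    return " ".join(text.lower().split())


def best_window_ratio(quote_words: list[str], source_words: list[str]) -> float:
    """Best word-level similarity between the quote and any same-length window of the source."""
    n = len(quote_words)
    if n == 0 or not source_words:
        return 0.0
    if len(source_words) <= n:
        return SequenceMatcher(None, quote_words, source_words).ratio()
    return max(
        SequenceMatcher(None, quote_words, source_words[i : i + n]).ratio()
        for i in range(len(source_words) - n + 1)
    )


def classify_quote(quote: str, sources: list[str]) -> str:
    """Label a quote "Found", "Paraphrased", or "No Match Found" against source texts."""
    q = normalize(quote)
    best = 0.0
    for source in sources:
        s = normalize(source)
        # Space padding makes the verbatim check respect word boundaries.
        if q and f" {q} " in f" {s} ":
            return "Found"
        best = max(best, best_window_ratio(q.split(), s.split()))
    # Conservative default: report no match rather than risk an incorrect attribution.
    return "Paraphrased" if best >= PARAPHRASE_THRESHOLD else "No Match Found"
```

For example, `classify_quote("the quick brown fox leaped over the dog", ["the quick brown fox jumped over the dog"])` shares 7 of 8 words in order (similarity 0.875), so it is labeled "Paraphrased", while a sentence with no overlapping words is labeled "No Match Found".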

Head-to-Head Comparison Reveals Significant Advantage

A detailed comparison with Perplexity Pro, often considered an industry leader, further highlights the strength of Facticity.AI's approach to accuracy. While Perplexity Pro delivered 105 correct citations, it also produced 90 incorrect citations, an error rate of 45% across the 200 entries that could undermine its suitability for newsrooms. In contrast, Facticity.AI provided 95 correct citations with only 28 incorrect citations and 13 partially correct citations, a substantially lower error rate of just 14%. In other words, while Perplexity Pro had slightly more correct citations in absolute terms, it introduced more than three times as many incorrect attributions (90 versus 28).
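The error rates above can be reproduced with simple arithmetic. The short sketch below assumes, as the 14% and 45% figures imply, that each rate is the number of incorrect citations divided by all 200 benchmark entries, and that Facticity.AI's 13 partially correct citations are not counted as incorrect. The dictionary layout and the `incorrect_rate` helper are illustrative names, not part of any published tool.

```python
TOTAL_ENTRIES = 200  # size of the benchmark used in the in-house analysis

# Counts reported in this release.
results = {
    "Perplexity Pro": {"correct": 105, "incorrect": 90},
    "Facticity.AI": {"correct": 95, "incorrect": 28, "partially_correct": 13},
}


def incorrect_rate(counts: dict[str, int], total: int = TOTAL_ENTRIES) -> float:
    """Share of all benchmark entries that received an incorrect citation."""
    return counts["incorrect"] / total


for name, counts in results.items():
    print(f"{name}: {incorrect_rate(counts):.1%} incorrect")

# How many times more incorrect citations Perplexity Pro produced.
ratio = results["Perplexity Pro"]["incorrect"] / results["Facticity.AI"]["incorrect"]
print(f"Perplexity Pro produced {ratio:.1f}x as many incorrect citations")
```

Run as-is, this prints 45.0% for Perplexity Pro, 14.0% for Facticity.AI, and a ratio of 3.2x, which is where "more than three times" comes from.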

The Science Behind Facticity.AI's Superior Performance

Three key factors contribute to Facticity.AI's exceptional citation accuracy:

1) A more conservative verification approach that prioritizes reporting "No Match Found" over risking an incorrect attribution, substantially reducing the spread of misinformation.

2) A precision-focused, scaffolded compound AI system that makes cited sources significantly less likely to contain errors or fabrications.

3) A balanced accuracy framework that optimizes for trustworthy citations instead of maximizing citation quantity regardless of correctness.

"In today's information ecosystem, incorrect citations aren't just annoying; they actively propagate misinformation," explained Dennis Yap, who was also the Chief Journalist and Co-Editor of Temasek Times. "Facticity.AI's approach prioritizes responsibility: when we provide a citation, users can be confident it's as reliable as possible."

Looking Forward

As AI search tools become increasingly integrated into news production, consumption, and fact-checking workflows, Facticity.AI's benchmark-leading performance sets a new standard for citation accuracy. AI Seer, the company behind Facticity.AI, is already in discussions with media outlets to integrate Facticity.AI/writer into their WordPress CMS. By combining technological innovation with a responsible approach to information verification, Facticity.AI is positioning itself as the trustworthy alternative in an increasingly crowded AI search market. For more information about Facticity.AI and its citation accuracy, visit www.Facticity.AI/blog.

Social Media Fact-Checker

With this week's launch of @AskFacticity on X (formerly Twitter), Facticity.AI brings its industry-leading citation accuracy directly to social media, where misinformation thrives. Users can also paste long documents into Facticity.AI, including AI-generated ones. Facticity.AI is also an automatic fact-checker for Instagram Reels and TikTok videos, not just YouTube videos: try it by pasting the URL of your favorite media source. Consumers can now have reliable fact-checking right alongside their infotainment.

Dennis Yap

+65 83050508

www.aiseer.co

Please reach out via LinkedIn (www.linkedin.com/in/dennisye) before calling.

Launch of @AskFacticity

https://x.com/AskFacticity/status/1901851537577795924

AI Seer's in-house testing

Shahruj's in-house testing of the benchmarks taken from: https://www.cjr.org/tow_center/we-compared-eight-ai-search-engines-theyre-all-bad-at-citing-news.php

AI Search Has A Citation Problem

Image taken from "AI Search Has A Citation Problem" from the Columbia Journalism Review

Search Chatbots’ responses to our queries were often confidently wrong

Original Image without Facticity.AI taken from "AI Search Has A Citation Problem" from the Columbia Journalism Review

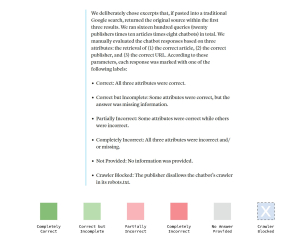

Explanation of the 6 categories used in the legend

Original Image without Facticity.AI taken from "AI Search Has A Citation Problem" from the Columbia Journalism Review

Correct: All three attributes were correct.
Correct but Incomplete: Some attributes were correct, but the answer was missing information.
Not Provided: No information was provided.